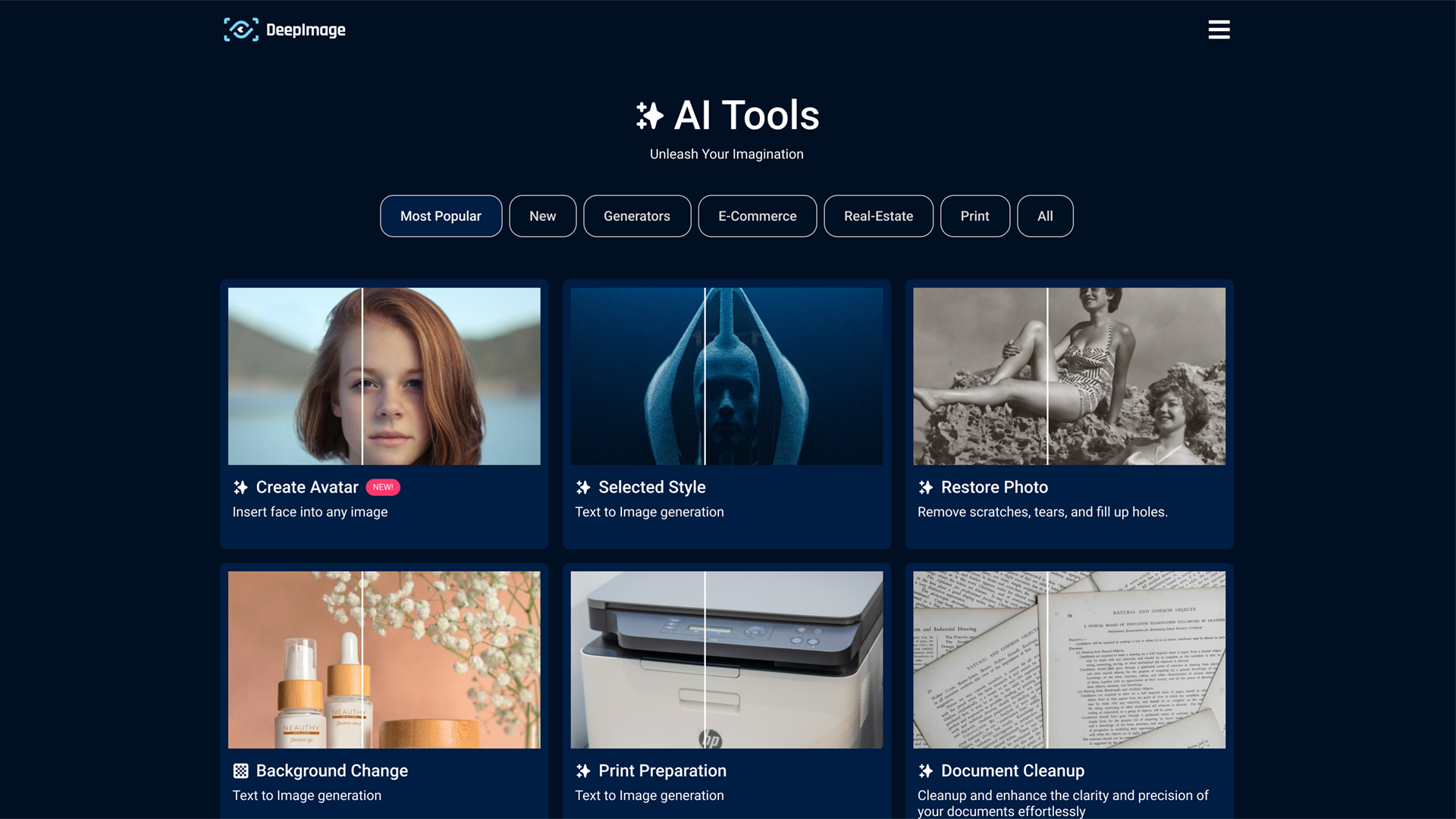

AI-powered 3D model reconstruction using data from a medical device

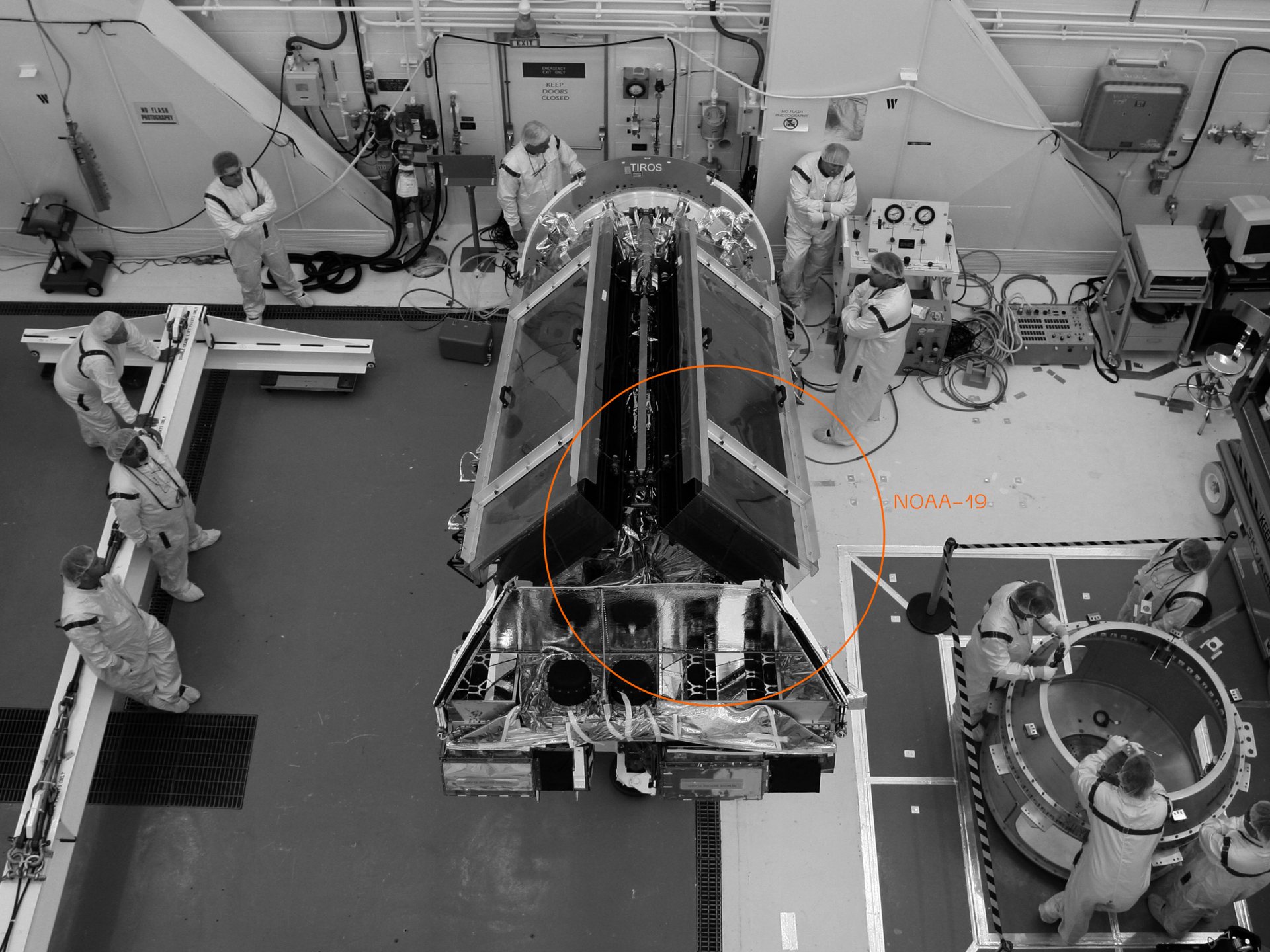

We combined skills in AI, 3D modelling and scientific research to train and fine-tune selected neural networks that reconstruct high-precision 3D representations from data captured by a custom optical setup.