Protecting your organization's data in the AI age

AI is here to stay, and AI tools are great for business when used consciously. But this does not seem to be common knowledge, and awareness among business people is lacking.

For the sake of convenience, most businesses are building their own competitors (AI services trained on their company data) and, at the same time, paying for it with their own money (the fees for the services they use).

That’s a bold statement, so let’s dive deeper and unpack it.

How are AI algorithms built?

Most AI/ML algorithms need real data, and a lot of it, to learn what the expected outcome is by iterating over those examples again and again. So, depending on the algorithm, its creators need to find a lot of good data on the subject and process it before they can even try to build the desired model.

Most companies state that they use publicly available data for that, which is troubling in itself. For example, this article is a great showcase of the Midjourney and DALL-E 3 copyright minefield:

As you can see, those images were generated, but they look nearly identical to the originals… I’m sure most of the data used to train those AI models was publicly available…

Your company data is yours, right?

But the real problem is that public data (even setting aside the debate over whether it’s OK to use it for AI model training) is just not enough to tackle challenging AI solutions that should bring real business value in various areas.

So what is left to do? Well, as recent events show, when you pay for a service, you are often also giving away your private, sometimes business-critical data to the provider to train their AI. Shocked? Here are the facts:

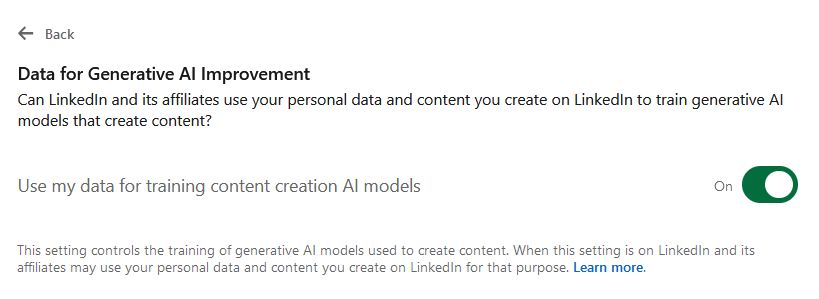

UPDATE: as of 2024-09-19, LinkedIn automatically opted everyone into training their AI. I suggest opting out right away. To opt out: Go to Settings & Privacy > Data Privacy > Data for Generative AI Improvement, and switch it off.

Zoom

Zoom’s recent changes to its Terms of Service hit the news hard. Here is a quote from CBS News:

In its blog post, Zoom said the company considers service-generated data “to be our data,” and experts confirm this language would allow the company to use such data for AI training without obtaining additional consent.

Notion

This example is not that shocking, but I’m still fairly confident that most customers do not know this is happening. Here is what the Notion.so AI Terms of Service say:

Artificial intelligence and machine learning models can improve over time to better address specific use cases. We may use data we collect from your use of Notion AI to improve our models when you voluntarily provide Feedback to us such as by labeling Output with a thumbs up or thumbs down; or give us your permission.

I would guess that most users do not read these terms of service, nor do they understand that clicking thumbs up or thumbs down is equivalent to voluntarily sharing their data to train AI models.

DeviantArt

This article is a great summary of how DeviantArt decided that your data is automatically used by their AI tool:

DeviantArt has decided your artwork there is fair game to be used in their new AI generator by default. You have to go to an obscure form to opt-out and requires 10 days to be reviewed.

Consequences of exposing your company data to third-party services

We could analyze more companies and their terms of service, and expose more of these types of situations, but there is a general problem:

- when you give third-party companies your private and company data by using their services, you are at the mercy of their Terms of Service, which change constantly; tracking all of those changes while also running a business is sometimes impossible,

- those companies may, according to their Terms of Service, use your data to improve their algorithms. What does that mean in practice? If you use, say, a hypothetical service X with AI features to write your business pitch, that data may be used to train their AI, and your competition, which also uses this service, may then generate their business pitch with an AI that learned from your data.

How do I protect my company data?

In the AI age, there are only two ways to protect your company data:

- constantly analyze the Terms of Service of every SaaS/Cloud solution you use,

- build your own privacy-aware services inside your company.
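
To give a feeling for what the first option involves, here is a minimal sketch (not a complete solution) of one way to notice that a vendor’s terms have changed: store a fingerprint of the text you last reviewed and compare it with the current text. The function names `fingerprint` and `changed_services`, as well as the services and terms texts, are hypothetical and only for illustration; fetching the actual terms pages and storing the fingerprints between runs is left out.

```python
import hashlib


def fingerprint(text: str) -> str:
    """Return a SHA-256 hex digest of the text with whitespace normalized."""
    # Collapse runs of whitespace so pure layout changes are not reported.
    normalized = " ".join(text.split())
    return hashlib.sha256(normalized.encode("utf-8")).hexdigest()


def changed_services(known: dict[str, str], current_texts: dict[str, str]) -> list[str]:
    """List services whose current terms differ from the stored fingerprint.

    A service with no stored fingerprint is reported too, so that newly
    adopted tools also get reviewed.
    """
    return sorted(
        name
        for name, text in current_texts.items()
        if known.get(name) != fingerprint(text)
    )


# Hypothetical example data: in practice the text would come from each
# vendor's published terms page, and `known` would be persisted between runs.
known = {"service-x": fingerprint("We never use your content for training.")}
current = {
    "service-x": "We may use your content to improve our models.",
    "service-y": "Your data stays yours.",
}
print(changed_services(known, current))  # ['service-x', 'service-y']
```

Note that a check like this only tells you that something changed. Someone still has to read the new terms and decide what they mean for your data, which is exactly why this option does not scale well.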

If you are still not convinced, I highly recommend listening to the Tim Ferriss podcast episode with Derek Sivers about Derek’s core belief in protecting your data and being cloud-free.

As a professional, security- and privacy-aware company, this is exactly the road we chose over a decade ago, and we couldn’t be happier: the AI age has proved it to be the right choice.

Our tool stack, for example, is based on:

- Synology Cloud - a private cloud/file service,

- Outline - just a fantastic, easy-to-use Knowledge Base and document-sharing solution,

- Matrix - (in my opinion) the best Chat solution there is!

- GitLab - for source code management and CI/CD,

- and of course, to connect them all (identity/SSO for one login) and protect our data behind a VPN - our enterprise open-source SSO & VPN: defguard.

Of course, as a development company, we have a lot more tools, but these (besides GitLab) should be the most common ones and are a good base for any organization. The main, critical services any organization needs in order to work are file exchange, knowledge exchange, and secure chat, with all of them protected by secure SSO & VPN.

So how is this relevant to you? If you would like to protect your data and be more secure, this is the first in a series of articles in which we will guide you on how to build your own tools and processes to protect your company data.

Please subscribe if you want to be notified about further articles in the series.

Robert Olejnik - Founder, Security and Open Source Advocate